Enhanced Detection of Hateful Memes Using Multimodal Learning

Co-authored with Kanishk Mittal

Introduction

In today’s digital landscape, detecting and mitigating the spread of hateful memes is crucial. These memes, often characterized by derogatory and inflammatory content, can perpetuate harmful stereotypes, incite violence, and contribute to digital toxicity. Despite efforts to censor such content, existing methods struggle to identify and address hateful memes effectively because of the inherent complexity of analyzing multimodal data.

The Challenge

Hateful memes combine nuanced, context-heavy text with imagery, posing a significant technical challenge. Traditional machine learning techniques lack the sophistication needed to analyze this multimodal data accurately: they often fail to integrate textual and visual features effectively, resulting in poor performance and a high rate of false positives. Furthermore, the large-scale transformer-based models that handle such data better require extensive computational resources to run and deploy, which is a challenge in real-world scenarios.

Our Approach: ConcatNet

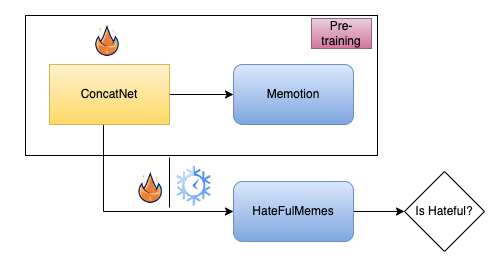

To address these challenges, we introduced ConcatNet, a novel architecture that concatenates the outputs of an image encoder and a text encoder. It pairs advanced text encoders such as RoBERTa with pre-trained multimodal networks such as CLIP, whose embeddings strengthen the model's understanding of images. We further improved the training process with additional image captioning data.

ConcatNet is designed to be extremely easy to set up and allows experimentation with different combinations of text and image encoder models. In our ablation study, we demonstrated how pre-training our model on a dataset catered to a similar task in the same domain significantly improves classification performance.

Datasets

Our research utilised two primary datasets:

- Hateful Memes Dataset: Released by Facebook AI, this dataset consists of over 10,000 meme images annotated with text and categorized into “Hateful” or “Not Hateful”. It is structured into a training set with 8,500 memes, a validation set with 500 memes, and a test set with 2,000 memes.

- Memotion Dataset: Curated for sentiment analysis, emotion detection, and humor classification tasks, this dataset was introduced in the “SemEval-2020 Task 8: Memotion Analysis” competition. Its offensiveness labels indicate whether a meme is not offensive, slightly offensive, or highly offensive.
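As a practical note on the Hateful Memes data, the public release distributes each split as a JSON Lines file with one meme per line. The sketch below assumes the field names `id`, `img`, `text`, and `label` (1 = hateful, 0 = not hateful) used in that release; `MemeExample` and `load_memes` are hypothetical names for illustration, not part of our code.

```python
import json
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class MemeExample:
    id: int
    img: str              # relative path to the meme image (assumed field)
    text: str             # text overlaid on the meme (assumed field)
    label: Optional[int]  # 1 = hateful, 0 = not hateful; may be absent in unlabeled files


def load_memes(lines: Iterable[str]) -> List[MemeExample]:
    """Parse JSON Lines records (one meme per line), skipping blank lines."""
    examples = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        examples.append(
            MemeExample(
                id=int(record["id"]),
                img=record["img"],
                text=record["text"],
                label=record.get("label"),  # None when the split ships without labels
            )
        )
    return examples


# Usage with in-memory lines; in practice, pass an open file object instead.
sample = [
    '{"id": 1, "img": "img/00001.png", "text": "example meme text", "label": 0}',
    '{"id": 2, "img": "img/00002.png", "text": "another caption"}',
]
memes = load_memes(sample)
print(len(memes), memes[0].label, memes[1].label)  # 2 0 None
```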

Experimental Setups

We explored two main experimental setups in our project:

1. ConcatNet: A simple concatenated architecture with an image model (CLIP) as an image encoder and a text model (BERT or RoBERTa) as the text encoder. We conducted multiple experiments with frozen and unfrozen weights of the pre-trained models to test their effectiveness in capturing contextual and spatial information.

2. Pre-trained Setup: We pre-trained our concatenated architecture on the Memotion dataset, which is similar to the Hateful Memes dataset. This pre-training provided our architecture with better weight initialisation for downstream classification tasks on the Hateful Memes dataset.
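The two setups differ in whether the pre-trained encoders are frozen and in how the weights are initialized. The PyTorch sketch below shows one way to express both. It assumes tiny `nn.Linear` layers as stand-ins for the real CLIP and BERT encoders, and it assumes the Memotion classification head is discarded and a fresh two-class head is attached for Hateful Memes; `ToyConcatNet`, `set_trainable`, and the learning rate are hypothetical.

```python
import torch
from torch import nn


def set_trainable(module: nn.Module, trainable: bool) -> None:
    """Freeze (trainable=False) or unfreeze (trainable=True) all parameters of a module."""
    for param in module.parameters():
        param.requires_grad = trainable


class ToyConcatNet(nn.Module):
    # Hypothetical stand-in: tiny Linear layers replace the real CLIP/BERT encoders.
    def __init__(self, num_classes: int):
        super().__init__()
        self.image_encoder = nn.Linear(16, 8)
        self.text_encoder = nn.Linear(16, 8)
        self.head = nn.Linear(16, num_classes)

    def forward(self, img: torch.Tensor, txt: torch.Tensor) -> torch.Tensor:
        fused = torch.cat([self.image_encoder(img), self.text_encoder(txt)], dim=-1)
        return self.head(fused)


# Stage 1: pre-train on Memotion (three offensiveness levels; training loop omitted).
memotion_model = ToyConcatNet(num_classes=3)

# Stage 2: copy every encoder weight, but not the 3-way head, into a 2-way model.
hateful_model = ToyConcatNet(num_classes=2)
encoder_state = {
    name: tensor
    for name, tensor in memotion_model.state_dict().items()
    if not name.startswith("head.")
}
missing, unexpected = hateful_model.load_state_dict(encoder_state, strict=False)

# Frozen setup: FREEZE_ENCODERS = True trains only the new head.
# Unfrozen setup (best reported test accuracy): encoders are fine-tuned as well.
FREEZE_ENCODERS = False
set_trainable(hateful_model.image_encoder, not FREEZE_ENCODERS)
set_trainable(hateful_model.text_encoder, not FREEZE_ENCODERS)

# Only trainable parameters go to the optimizer; lr is a placeholder value.
optimizer = torch.optim.Adam(
    [p for p in hateful_model.parameters() if p.requires_grad], lr=1e-5
)
```

`load_state_dict(..., strict=False)` reports the head parameters as missing keys, which is expected here because the head is intentionally re-initialized.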

Model Architectures

We developed several specific neural network architectures using CLIP for image encoding and transformer models like BERT and RoBERTa for text encoding. Variations included:

- Clip2Clip: Both image and text components are encoded using CLIP, tuned for both modalities.

- ClipBertLarge and ClipBertUnfrozen: Use CLIP for image encoding and BERT for text encoding, with the “Unfrozen” model allowing both encoders to be trainable.

- Clip+RoBERTa with Projection: Uses RoBERTa for text encoding along with a linear projection layer.
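At its core, each variant fuses the two encoders' outputs by concatenation before classification. A minimal PyTorch sketch of such a fusion head follows, loosely in the spirit of the Clip+RoBERTa with Projection variant. The embedding sizes are assumptions (512 for a CLIP ViT-B/32 image embedding, 768 for a RoBERTa-base text embedding), as are the ReLU, dropout rate, and projection width. In a full model the embeddings would come from the pre-trained encoders (for example, `CLIPModel.get_image_features` in Hugging Face Transformers) rather than from random tensors; `ConcatFusionHead` is a hypothetical name.

```python
import torch
from torch import nn


class ConcatFusionHead(nn.Module):
    """Concatenates projected image and text embeddings, then classifies them.

    Default sizes are assumptions: 512-d CLIP ViT-B/32 image embeddings and
    768-d RoBERTa-base text embeddings.
    """

    def __init__(self, img_dim: int = 512, txt_dim: int = 768,
                 proj_dim: int = 512, num_classes: int = 2, dropout: float = 0.1):
        super().__init__()
        # Linear projections map both modalities to a common width.
        self.img_proj = nn.Linear(img_dim, proj_dim)
        self.txt_proj = nn.Linear(txt_dim, proj_dim)
        self.classifier = nn.Sequential(
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(2 * proj_dim, num_classes),
        )

    def forward(self, img_emb: torch.Tensor, txt_emb: torch.Tensor) -> torch.Tensor:
        # (batch, proj_dim) + (batch, proj_dim) -> (batch, 2 * proj_dim)
        fused = torch.cat([self.img_proj(img_emb), self.txt_proj(txt_emb)], dim=-1)
        return self.classifier(fused)  # unnormalized logits, one per class


head = ConcatFusionHead()
logits = head(torch.randn(4, 512), torch.randn(4, 768))
print(logits.shape)  # torch.Size([4, 2])
```

Because the fusion is a plain concatenation, swapping the text encoder only changes `txt_dim`; a Clip2Clip-style setup would pass CLIP's 512-d text embeddings with `txt_dim=512`.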

Key Findings

Pre-processing

For text, we implemented a comprehensive pre-processing pipeline using libraries such as spaCy, contractions, demoji, and NLTK to perform the following cleaning steps:

- Remove URLs: Eliminates substrings beginning with “http” to clean the text.

- Text Normalization: Converts text into a consistent format using Unicode normalization.

- Handle Emoji: Replaces emojis with corresponding English words.

- Remove Stopwords: Filters out common English stopwords using NLTK’s list.

- Replace Abbreviations: Expands contractions and common abbreviations to their full form.

- Remove Punctuation: Strips punctuation, keeping only alphanumeric characters and spaces.

- Map Chat Words: Translates chat shorthand (like “lol” or “brb”) into full phrases.

- Lemmatization: Reduces words to their base or dictionary form.

- Word Tokenizer: Splits the text into a list of words (tokens).
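The cleaning steps can be chained into a single function. The sketch below uses only the Python standard library, so tiny hand-written dictionaries stand in for the contractions, demoji, and NLTK resources (their contents are illustrative assumptions). Lemmatization is omitted because it needs a real lemmatizer such as spaCy's, and the step order is an assumption: contractions are expanded before punctuation is stripped so that apostrophes still match. `clean_text` is a hypothetical name.

```python
import re
import unicodedata
from typing import List

# Illustrative stand-ins (assumptions) for the contractions, demoji and NLTK resources.
CONTRACTIONS = {"don't": "do not", "can't": "cannot", "it's": "it is", "u": "you"}
EMOJI_NAMES = {"\U0001F602": "face with tears of joy"}
CHAT_WORDS = {"lol": "laughing out loud", "brb": "be right back"}
STOPWORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "it", "this"}


def clean_text(text: str) -> List[str]:
    """Hypothetical cleaning pipeline: returns a list of cleaned tokens."""
    # Remove URLs: drop every substring that starts with "http" up to the next whitespace.
    text = re.sub(r"http\S+", " ", text)
    # Unicode normalization (NFKC also folds e.g. full-width letters), then lowercase.
    text = unicodedata.normalize("NFKC", text).lower()
    # Handle emoji: replace each known emoji with its English name.
    for emoji, name in EMOJI_NAMES.items():
        text = text.replace(emoji, f" {name} ")
    # Replace abbreviations: expand contractions word by word, before apostrophes are stripped.
    text = " ".join(CONTRACTIONS.get(word, word) for word in text.split())
    # Remove punctuation: keep only ASCII letters, digits and whitespace.
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    # Map chat words, tokenize on whitespace, and drop stopwords.
    tokens = []
    for word in text.split():
        tokens.extend(CHAT_WORDS.get(word, word).split())
    return [token for token in tokens if token not in STOPWORDS]


print(clean_text("LOL this is the best meme \U0001F602 https://example.com/x don't share"))
# ['laughing', 'out', 'loud', 'best', 'meme', 'face', 'with', 'tears', 'joy', 'do', 'not', 'share']
```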

For images, we introduced augmentations like resizing, random horizontal flipping, random grayscale conversion, and tensor transformation to enhance the robustness of the model.

Training and Optimization

We carefully selected hyperparameters to optimize performance, using PyTorch as the deep learning framework and Hugging Face Transformers for pre-trained models and tokenizers. Our experiments used the Adam optimizer and the cross-entropy loss, with detailed configurations for batch sizes, learning rates, and logging. This setup allowed us to fine-tune the models effectively and track performance metrics accurately.
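A training loop with these components follows the usual PyTorch pattern. The sketch below assumes the encoders' outputs are already computed (random tensors stand in for them) and uses a single hypothetical linear layer over the concatenated embeddings; the batch size and learning rate are placeholders, not the tuned values.

```python
import torch
from torch import nn


class LinearConcatClassifier(nn.Module):
    # Hypothetical minimal model: one linear layer over concatenated embeddings.
    def __init__(self, img_dim: int = 512, txt_dim: int = 768, num_classes: int = 2):
        super().__init__()
        self.fc = nn.Linear(img_dim + txt_dim, num_classes)

    def forward(self, img_emb: torch.Tensor, txt_emb: torch.Tensor) -> torch.Tensor:
        return self.fc(torch.cat([img_emb, txt_emb], dim=-1))


def train_one_epoch(model, batches, optimizer, loss_fn):
    """Run one pass over `batches`; return (mean loss, accuracy) over all examples."""
    model.train()
    total_loss, correct, seen = 0.0, 0, 0
    for img_emb, txt_emb, labels in batches:
        optimizer.zero_grad()
        logits = model(img_emb, txt_emb)
        loss = loss_fn(logits, labels)  # CrossEntropyLoss expects raw logits
        loss.backward()
        optimizer.step()
        total_loss += loss.item() * labels.size(0)
        correct += (logits.argmax(dim=-1) == labels).sum().item()
        seen += labels.size(0)
    return total_loss / seen, correct / seen


torch.manual_seed(0)
BATCH_SIZE, LR = 8, 1e-4  # placeholders, not tuned values
batches = [
    (torch.randn(BATCH_SIZE, 512), torch.randn(BATCH_SIZE, 768),
     torch.randint(0, 2, (BATCH_SIZE,)))
    for _ in range(4)
]
model = LinearConcatClassifier()
optimizer = torch.optim.Adam(model.parameters(), lr=LR)
loss_fn = nn.CrossEntropyLoss()
for epoch in range(3):
    loss, acc = train_one_epoch(model, batches, optimizer, loss_fn)
    print(f"epoch {epoch}: loss={loss:.4f} acc={acc:.2%}")
```

Tracking training accuracy next to validation accuracy per epoch is what exposes the overfitting pattern reported below (high training accuracy, much lower validation and test accuracy).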

Experimentation Results

1. Unfrozen CLIP and BERT Encoders: Showed high training accuracy (90.4%) but severe overfitting, with lower validation and test performance (around 60%).

2. Frozen Dual CLIP Encoders: Slight improvement in test accuracy (62.23%), indicating the need for more flexible adaptation strategies.

3. Frozen CLIP and RoBERTa Encoders: Slight improvement in validation and test scenarios, highlighting the benefits of diverse training backgrounds for generalization.

4. Frozen CLIP and BERT Encoders: High training accuracy (92.34%) but significant drop in validation and test performance, emphasizing the need for trainable flexibility.

5. Unfrozen CLIP and BERT Encoders (Pre-trained on Memotion): Significant improvement in test accuracy (89.82%), demonstrating the benefits of model adaptability and pre-training.

Figure: Freezing vs. unfreezing model encoders. The black line denotes the experiment with the Unfrozen CLIP & BERT configuration, and the blue line denotes the experiment with the Frozen CLIP & BERT configuration.

Conclusion

Our experiments highlight the critical importance of leveraging pre-trained models and enabling adaptability for nuanced tasks like hateful meme detection. Pre-training on a relevant dataset (Memotion) and fine-tuning on the target dataset (Hateful Memes) proved highly effective, raising test accuracy to 89.82%.

By developing robust and efficient detection mechanisms, our research contributes to better content moderation and fosters a safer online environment. This approach has the potential to reduce the propagation of hateful ideologies, promote constructive conversations, and safeguard marginalized communities from online harassment and discrimination.